Android

Early on in my career, I aspired to be an Android developer. In my early 20s, I recognized the rapidly growing mobile market, and largely taught myself Java (poorly) in order to jump on the hype train. I made a name for myself by releasing Ventriloid, and continued to pursue side projects that targeted Android, Chromecast, Glass, and other Google platforms for several years thereafter.

I was a Google fanboy, and I attended Google I/O in 2016. Later that year, I managed to secure a job at Google, working with the Wear OS (Android Wear, back then) "partner engineering" team - a team of developers that works with external manufacturers to bring new Android smartwatches to market. Making it through the interview process was extremely validating to me as a programmer and an Android expert, and actually working at Google was a dream come true and a life goal achieved.

However, something about the magic was lost. I only worked at Google for three months before deciding to move back to Georgia to be with my family. I wasn't "let go" for performance reasons or anything else. In fact, on my last day with the company, I was given a small raise to celebrate my 90th day - a gesture that my manager found quite funny, and one that I suppose represented something I disliked about working there. Why give someone a raise on their last day? The answer is simple: the process was already underway before I put in my notice, and nothing was going to stop that train. Big companies have a lot of "processes".

It's not that I hated my time there; on the contrary, it was an incredible place to work. They gave me all the resources I could possibly ask for with very little red tape, and equally little guidance. For someone like me, it seemed like it would be a perfect fit. There just seemed to be such a big disconnect between developers like myself and upper management, and the gap between us was filled with automated processes. I was just a small cog in a giant wheel, with absolutely no real agency over the direction of the company.

After I left Google, I stopped upgrading my phone every year, I stopped attending tech conferences, and I stopped investing my time into whatever new products came out. In hindsight, that's about the time all of my side projects started being more oriented around video games. A game is its own product that can be released on whichever platforms suit it best.

My "life goal" at this point is to support myself by building my own products, not as a cog in someone else's wheel. Until I can manage to do that, I much prefer the influence I have over the direction of small companies. Every single person at my current company makes such a large impact on the success of our products, and I can't fathom how anyone would want it any other way.

23.1 The Application Shell

I haven't worked much with Android since I left Google, but not much has changed. Everything is built and deployed using Android Studio, similar to how Apple insists on the use of Xcode. In hindsight, I regret avoiding Xcode so adamantly - I've wasted a lot of time trying to get CMake and CLion to do what Xcode already does out of the box. I'll use a different strategy this time, and if it works out, I may spend some time retrofitting Scampi to work in a similar way.

Android Studio's project creation dialog has a nice selection of templates, including an option for a "Game Activity (C++)". I'll create a test project and just browse around the structure a bit. Unsurprisingly, the project uses Gradle as its build system. This has been the case for Android for a long time now, though Android veterans like myself might recall a time when it used Apache Ant, which is how Ventriloid was built. Back then, neither Android Studio nor JetBrains IntelliJ IDEA was an option yet, so the Android SDK integrated with the Eclipse IDE. Unfortunately, the instructions to build Ventriloid contain links that have since been superseded by modern versions of the Android SDK, so it would take a lot of work to actually get it to run these days, but I digress.

The template uses the externalNativeBuild directive of the Android Gradle plugin to trigger a native build of a CMake project located within the project's app/src/main/cpp directory. The application's entry point is written in Kotlin, but it appears to do almost nothing except load the native library built by CMake. The native library has its own entry point: void android_main(struct android_app* app). From here, the code registers callbacks for various application lifecycle events and does regular game-loop things (polling for system events and rendering to the screen in a loop). The files in the project are split about 50/50 between actual C++ code and various configuration files (for the Gradle build system and Android-specific XML resource files).

I built and launched the template app within a Pixel 3a emulator (configured by default in this version of Android Studio), and was greeted by a blue background with a green Android head rendered in the center. Looking in the Renderer.cpp file, it appears that this is just done with the very standard OpenGL ES 3.0 functions that we've been using for Pesto - that's really promising!

I think I've seen enough. Let's give it a shot.

Carbonara

Like with all of the platforms, I'll need a codename that is a pasta dish that can be prepared using linguine pasta. I looked up a bunch of recipes and decided to go with "carbonara", which I've never actually had before, but I am going to Italy next month, so maybe I'll have a chance!

I used Android Studio's "Game Activity (C++)" template to create another new project named carbonara, located within a subdirectory of the Git repository. My hope is that I will be able to open this directory in Android Studio, while still referencing the CMake project of its parent directory. This should allow me to keep the engine and game code shared, while getting the full benefit of the Android-specific tooling provided by Android Studio. I hate Xcode, but I have found myself using its tooling for Metal debugging and configuring provisioning profiles, so I wish I had just used this sub-project model from the beginning, rather than spending a bunch of time writing scripts that just invoke Xcode commands anyway.

I left most of the files created by the template in-place, but moved the CMakeLists.txt file up to the root of the carbonara directory, updated its contents to point to the files contained within the app/src/main/cpp directory, and updated the top-level CMakeLists.txt file to include a branch for Android builds.

CMakeLists.txt snippet

```cmake
if (APPLE)
  ...
elseif (EMSCRIPTEN)
  ...
elseif (ANDROID)
  add_subdirectory(carbonara)
  add_subdirectory("${AUDIO_DIR}/openal")
  add_subdirectory("${RENDERERS_DIR}/opengl")
endif()
```

I updated the externalNativeBuild in Carbonara's app/build.gradle.kts to point to the top-level CMakeLists.txt and refreshed the Gradle project in Android Studio. While the relative file locations worked correctly, the project couldn't refresh correctly because the OpenGL and OpenAL packages could not be found. Within the renderers/opengl/CMakeLists.txt and audio/openal/CMakeLists.txt files, we required those packages with lines like find_package(OpenGL REQUIRED). The template project's CMakeLists.txt just links to GLESv3 directly without having to find any package at all. I ripped the find_package() directives (and subsequent link and include directives) out of the renderer and audio CMakeLists.txts, and moved them directly into Pesto's CMakeLists.txt. Apparently the way we link to OpenGL and OpenAL is platform-specific, so we'll just have to handle it on a per-platform basis. The template project doesn't link to any audio library, so I'll just have to handle that later. With this configuration, the app compiled and launched in the emulator exactly as it did in the original template project.

I spent a while stubbing out the different platform abstractions in the app/src/main/cpp/platform directory, similar to what I've done for every other platform thus far.

- I extracted the native polling logic (achieved via ALooper_pollAll()) into the AndroidInputManager.
- AndroidTimeManager is based on the clock_gettime() function.
- AndroidLogger utilizes __android_log_print().
- AndroidOpenGLFileLoader always returns an empty image.
- AndroidLifecycleManager doesn't do anything.
- AndroidSaveManager just returns default values with no persistence.
- AndroidLeaderboardManager is disabled for now, just like Pesto's.
- AndroidAudioManager is an empty stub that I'll delete once I get OpenAL working.
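The AndroidTimeManager's code isn't shown here, but a clock_gettime()-based timer might look something like this minimal sketch. It assumes CLOCK_MONOTONIC (which isn't affected by wall-clock changes) and a per-frame delta measured in seconds. monotonicSeconds() and DeltaTimer are hypothetical names, not the actual class.

```cpp
#include <time.h>

// Hypothetical helper: seconds from a monotonic clock, which only moves
// forward and ignores wall-clock adjustments.
inline double monotonicSeconds() {
  timespec ts{};
  clock_gettime(CLOCK_MONOTONIC, &ts);
  return static_cast<double>(ts.tv_sec) + static_cast<double>(ts.tv_nsec) / 1e9;
}

// Hypothetical frame timer: each tick() returns the seconds elapsed since
// the previous tick() (or since construction, for the first call).
class DeltaTimer {
 public:
  DeltaTimer() : _last(monotonicSeconds()) {}

  float tick() {
    const double now = monotonicSeconds();
    const double delta = now - _last;
    _last = now;
    return static_cast<float>(delta);
  }

 private:
  double _last;
};
```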

In the main.cpp, I constructed a new Engine with the Android platform implementations and the OpenGLRenderer upon receiving the APP_CMD_INIT_WINDOW command, and destroyed it upon receiving the APP_CMD_TERM_WINDOW command. The android_main() function then just loops while the application isn't being destroyed, calling tick() on the Engine if it exists.

When I tried to compile this, I was greeted with a few compilation errors that all boiled down to missing includes - a missing #include <vector> here, a missing #include <unordered_map> there. These were easy fixes, and a funny reminder that standard library implementations differ in which headers they include transitively, so a forgotten #include can slip by on one toolchain and fail on another.

Unfortunately, even after fixing the compilation errors, the app would just hang on the splash screen, like the Engine was never getting tick()'d. After fiddling around for a while, I discovered that the lifecycle events (such as APP_CMD_INIT_WINDOW and APP_CMD_TERM_WINDOW) rely on the native polling logic to occur, which makes perfect sense. Unfortunately, the polling logic happens in the AndroidInputManager, which doesn't get created (or polled) until the Engine is created, which doesn't happen until the APP_CMD_INIT_WINDOW command is received!

I decided to move the native polling logic into a static pollNativeEvents() method of the AndroidInputManager. The AndroidInputManager's regular pollEvents() method just calls the static pollNativeEvents() method, and the loop in android_main() will also call the static method if the Engine hasn't been created yet. It's not the cleanest solution, but it works just fine.
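The chicken-and-egg problem, and the fix, can be modeled without any Android code at all. In this sketch, the Command enum, Engine, App, and runLoop() are hypothetical stand-ins for the android_app lifecycle commands, the real Engine, and android_main(). With pollWithoutEngine set to false, it reproduces the hang, because nothing ever drains the INIT_WINDOW command.

```cpp
#include <deque>
#include <memory>

// Hypothetical stand-ins for APP_CMD_INIT_WINDOW, APP_CMD_TERM_WINDOW,
// and a destroy request.
enum class Command { InitWindow, TermWindow, Destroy };

struct Engine {
  int ticks = 0;
  void tick() { ++ticks; }
};

struct App {
  std::deque<Command> pending;     // simulated native event queue
  std::unique_ptr<Engine> engine;  // only exists while there is a window
  bool destroyRequested = false;
};

// Stand-in for the static pollNativeEvents(): drains lifecycle commands
// and creates or destroys the Engine in response.
void pollNativeEvents(App& app) {
  while (!app.pending.empty()) {
    const Command command = app.pending.front();
    app.pending.pop_front();

    switch (command) {
      case Command::InitWindow:
        app.engine = std::make_unique<Engine>();
        break;
      case Command::TermWindow:
        app.engine.reset();
        break;
      case Command::Destroy:
        app.destroyRequested = true;
        break;
    }
  }
}

// With pollWithoutEngine == false, events are only polled through the
// Engine, which never gets created: the original hang.
void runLoop(App& app, int maxIterations, bool pollWithoutEngine) {
  for (int i = 0; i < maxIterations && !app.destroyRequested; ++i) {
    if (app.engine) {
      app.engine->tick();
      pollNativeEvents(app);  // what AndroidInputManager::pollEvents() forwards to
    } else if (pollWithoutEngine) {
      pollNativeEvents(app);  // the fallback call from android_main()
    }
  }
}
```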

I managed to get past the splash screen this time, but the app crashed almost immediately, with error logs indicating some unknown failure compiling one of the vertex shaders on startup. I felt silly, but it was obvious that I had completely neglected to create the OpenGL context for the application! The template code uses EGL to handle context and surface creation, so I decided to copy that code and slap it in the APP_CMD_INIT_WINDOW command handler, prior to constructing the Engine.

Well, that fixed the shader errors, but the app was still crashing, this time with an error stating "Unable to match the desired swap behavior". I spent way too long Googling the error before deciding to check what I was doing differently from the template project. I finally narrowed it down to a missing call to eglSwapBuffers() after each tick! Apple's MetalKit framework handles this for us, as does Emscripten's main loop logic, so I had completely forgotten about it!

Once again, the app was crashing, complaining about sampling invalid texture coordinates. It dawned on me that I never called the Renderer's resize() method, so naturally the off-screen buffer sampling was broken. The template project checks if the screen size has changed every frame, so I guess I'll do that too. When building Pesto, I had added an _isFirstFrame flag to the OpenGLRenderer to prevent sampling the off-screen buffer before the resize had happened. I extended this check to prevent sampling at all if the width or height of the Viewport is zero.
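Here's a rough sketch of the per-frame resize check combined with the zero-size guard. Viewport, RendererSketch, and the resize counter (standing in for _isFirstFrame) are hypothetical simplifications. The real OpenGLRenderer would also reallocate its off-screen buffers on resize.

```cpp
#include <cstdint>

// Hypothetical stand-in for the engine's Viewport.
struct Viewport {
  int32_t width = 0;
  int32_t height = 0;
};

class RendererSketch {
 public:
  // Called once per frame with the current surface size (queried from the
  // platform). Returns true only when the size actually changed.
  bool updateSize(int32_t width, int32_t height) {
    if (width == _viewport.width && height == _viewport.height) {
      return false;
    }
    _viewport.width = width;
    _viewport.height = height;
    ++_resizeCount;  // the real renderer would resize its off-screen buffers here
    return true;
  }

  // Never sample the off-screen buffer before the first resize, or while
  // either dimension is zero.
  bool canSampleOffscreen() const {
    return _resizeCount > 0 && _viewport.width > 0 && _viewport.height > 0;
  }

 private:
  Viewport _viewport;
  int _resizeCount = 0;
};
```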

Finally, some success!

Obviously the text rendering doesn't work without a functional OpenGLFileLoader. The dimensions of the viewport are strange though. Looking through the template project, it looks like they call glViewport() on any resizes, so I added that to the OpenGLRenderer, and it looks much better! I also tested Pesto to make sure it still functioned as intended.

Cleanup

I decided to extract everything into an ApplicationAdapter class, containing a single tick() method. Eventually, it will handle the Android lifecycle events as well, but it just feels cleaner this way for now.

The template project contained a bunch of #includes of various .c, .cpp, and .hpp files, which effectively means we were embedding other source files into our own source file. I managed to find this article describing methods for integrating the games-activity library. The template project includes the shared library by default, but linking to the static library allows us to avoid embedding most of those source files into our own code. I was getting an UnsatisfiedLinkError after switching to the static library, but Google was one step ahead of me, providing the solution to that issue.

I spent a little bit of time configuring CLion with a Debug-Android CMake profile, which allows me to work on the Android native code from CLion using my already-configured code styling profile, and even build the library (though not the app) to check for compiler errors. The Android build system appears to be using Prefab to compile and cache the native libraries that I'm linking to (game-activity, specifically). In order for my CMake project to compile in CLion, I'll need to tell it where the artifacts for Prefab are located. Here are the CMake options that I've configured in CLion, though I make no promises that they would look the same on your system:

```
-DCMAKE_TOOLCHAIN_FILE=/Users/jdriggers/Library/Android/sdk/ndk/25.1.8937393/build/cmake/android.toolchain.cmake -DANDROID_ABI=arm64-v8a -DCMAKE_FIND_ROOT_PATH=/Users/jdriggers/CLionProjects/linguine/carbonara/app/.cxx/Debug/235h4r5a/prefab/arm64-v8a/prefab
```

I dug up my old Pixel 3 XL (my previous phone, before buying my iPhone 13 Pro). I had to let it charge for a little while, but after it turned on, I was able to plug the phone into my computer and start up the app without any further changes - I'm insanely proud of that! Here's the commit containing the new application shell.

23.2 Input Management

The AndroidInputManager is the only thing standing between us and a playable Android build, so that's what I want to tackle first. Hopefully we can handle everything from the native code without having to deal with JNI translations, which can be quite expensive - and very ugly!

I started by once again inspecting how the template project handled inputs, and did a bit of research into other options for handling inputs using the native game-activity library. It appears that the library allows you to retrieve a buffer of inputs that have already been marshalled over JNI for us. While it's nice that I don't have to deal with the JNI code, it kind of sucks that it has to use JNI at all - ideally the native activity could have direct access to the hardware inputs, but I'm not entirely surprised by this reality.

Even more disappointing is the lack of a native GestureDetector implementation. I can either re-implement the existing Java version in C++ (which could take substantial amounts of effort and provide a large surface area for bugs), or I can just use the Java API and notify the native library of any gestures over JNI.

Touch Events

In any case, I'll just start by implementing the basic functionality for the getTouches() method. I copied the input handling loop from the template project and started whittling it down for my own needs. The API is remarkably similar to the one for iOS, containing events for "down", "up", and "moved" inputs. Because I'm polling these events directly instead of reacting to callbacks, I don't need to add them to a "pending" data structure - I can just add them directly to the "active" data structure.

Of course I didn't get it right on the first try. The app crashed in the GestureRecognitionSystem when I tried to press the "Play" button, having received an invalid entity ID from the Renderer. This always means I've screwed up the coordinate system. After adding a breakpoint to inspect the coordinates that I'm requesting from the Renderer, it looks like I'm asking for pixel coordinates instead of normalized coordinates. Whoops.

I added a reference to the Viewport as a dependency to the AndroidInputManager so that I can convert the pixel coordinates to a normalized form. This time, the app didn't crash, but the screen was reacting as if the vertical coordinates were upside-down. Obviously I need to invert the Y coordinate of the inputs.

I'm very sleepy and accidentally just negated the Y coordinate instead of inverting it, causing the app to crash again. An easy fix, but I'm probably pushing myself too hard. I'm already invested in getting this to work before going to sleep though, so I will press on...
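The conversion from pixels to normalized coordinates, including the difference between inverting and negating Y, fits in a few lines. This sketch assumes the renderer expects coordinates in [0, 1] with the origin at the bottom-left, while Android reports pixels with the origin at the top-left. NormalizedPoint and normalizeTouch() are hypothetical names.

```cpp
// Hypothetical normalized touch position in [0, 1] x [0, 1].
struct NormalizedPoint {
  float x;
  float y;
};

// Converts a top-left-origin pixel position into bottom-left-origin
// normalized coordinates (an assumed convention for the renderer).
NormalizedPoint normalizeTouch(float pixelX, float pixelY,
                               float viewportWidth, float viewportHeight) {
  return {
      pixelX / viewportWidth,
      // Invert: 1 - y. Negating (-y) would produce values in [-1, 0],
      // which fall outside the viewport entirely.
      1.0f - pixelY / viewportHeight,
  };
}
```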

The app now seems to only be reacting to the "down" events. I added some logging, pulled out some hair, and realized that I was trying to insert() into the std::unordered_map of active inputs during the "up" and "move" events using an ID that was already in the map, and insert() silently does nothing when the key already exists. I changed the calls to modify the existing entry instead of trying to insert again, and buttons now work beautifully!
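The root cause is easy to reproduce in isolation. std::unordered_map::insert() and emplace() leave an existing entry untouched when the key is already present, so "move" and "up" events have to update the entry in place. Touch, TouchMap, and the onDown()/onMove()/onUp() helpers below are hypothetical, not the AndroidInputManager's actual types.

```cpp
#include <cstdint>
#include <unordered_map>

// Hypothetical touch record keyed by pointer ID.
struct Touch {
  float x;
  float y;
  bool isActive;
};

using TouchMap = std::unordered_map<uint64_t, Touch>;

void onDown(TouchMap& touches, uint64_t id, float x, float y) {
  touches.emplace(id, Touch{x, y, true});  // fine: the ID is new
}

void onMove(TouchMap& touches, uint64_t id, float x, float y) {
  // emplace()/insert() would be a silent no-op here, because `id` exists.
  auto it = touches.find(id);
  if (it != touches.end()) {
    it->second.x = x;
    it->second.y = y;
  }
}

void onUp(TouchMap& touches, uint64_t id) {
  auto it = touches.find(id);
  if (it != touches.end()) {
    it->second.isActive = false;
  }
}
```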

Swipe Events

I did a little bit of reading about things that have changed with JNI since the last time I used it for Ventriloid back in 2010. Basically nothing has changed, but Android has added some nice optimizations for specific use cases. Since swipe events don't require any parameters or return values, we can use the @CriticalNative annotation on our native methods to reduce the performance overhead.

I created a new Native Kotlin class that contains the external JNI functions onLeftSwipe(): Void and onRightSwipe(): Void. Android Studio automatically detected that those functions don't exist in my C++ library and offered to create them for me - neat! It created two functions with painfully long signatures:

```cpp
JNIEXPORT jobject JNICALL Java_com_justindriggers_carbonara_Native_onLeftSwipe()
JNIEXPORT jobject JNICALL Java_com_justindriggers_carbonara_Native_onRightSwipe()
```

Yes, JNI is disgusting.

I'm surprised that it defined the return type as jobject instead of void. Whatever, I still have some more boilerplate to whip up.

I created a private CarbonaraGestureDetector class, inheriting from SimpleOnGestureListener, within my MainActivity.kt file. I created a member variable to hold an instance of the new class, instantiated it within the onCreate() method, and invoked its onTouchEvent() method within an overridden implementation of the Activity's onTouchEvent().

carbonara/app/src/main/java/com/justindriggers/carbonara/MainActivity.kt snippet

```kotlin
override fun onTouchEvent(event: MotionEvent): Boolean {
    return gestureDetector.onTouchEvent(event) || super.onTouchEvent(event)
}
```

The GestureDetector interface contains methods for handling different types of gestures, but there is no "swipe" gesture handler. I guess onFling() is supposed to be similar, so let's give that a shot. I implemented the method within our implementation, using Google for some guidance on how to determine the swipe direction from the provided events and velocities, and added some logs to the blocks that handle the swipes.
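The direction check typically compares horizontal and vertical travel, and also requires a minimum distance and velocity. The real logic lives in Kotlin inside onFling(). This is the same idea sketched in C++ for consistency with the rest of the engine code. The names and threshold values are hypothetical and would need tuning for screen density.

```cpp
#include <cmath>

enum class SwipeKind { None, Left, Right };

// Hypothetical thresholds, in pixels and pixels per second.
constexpr float kMinDistancePx = 100.0f;
constexpr float kMinVelocityPxPerSec = 100.0f;

// Classifies a completed fling from its start/end positions and its
// horizontal velocity (as onFling() would provide them).
SwipeKind classifyFling(float startX, float startY, float endX, float endY,
                        float velocityX) {
  const float dx = endX - startX;
  const float dy = endY - startY;

  if (std::fabs(dx) <= std::fabs(dy)) {
    return SwipeKind::None;  // mostly vertical
  }
  if (std::fabs(dx) < kMinDistancePx || std::fabs(velocityX) < kMinVelocityPxPerSec) {
    return SwipeKind::None;  // too short or too slow
  }
  return dx > 0 ? SwipeKind::Right : SwipeKind::Left;
}
```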

That seemed to work, so I decided to invoke the native methods from the onFling() method, and added some logs to the native implementations. The app crashed as soon as I tried to swipe. Yikes. It's very late, but I can't stop now.

The reported error seems to complain about returning data from the native code to the Java implementation. Naturally, I assumed it had to do with the jobject return types, so I changed those to void instead. Unfortunately, JNI now can't find the functions at all! I tried converting the native functions to use the RegisterNatives() function instead, but that didn't work either.

I finally converted the Native.kt Kotlin class into a Native.java Java class instead - mostly because I felt like I was out of ideas - and it freaking worked. What did I miss?

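Ah. Kotlin's Void is java.lang.Void, an object type, so declaring those methods with a Void return type told JNI to expect an object to be returned. A Kotlin function with no declared return type returns Unit, which compiles down to a plain JVM void method.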

I reverted the Java conversion and removed the return type from the methods entirely, and it continued to work. I also reverted the RegisterNatives() mechanism (against the recommendation of the linked documentation) because the weirdly long arbitrary function signatures are still less code than using JNI_OnLoad().

The swipe events are now being logged from the C++ implementation, but I need to actually wire things up to the AndroidInputManager. Unfortunately, the JNI calls are made in a static context - there is no "object" associated with these functions. I added some static variables to the AndroidInputManager, set them from the JNI functions, and "polled" them in the pollEvents() method, resetting them to false afterward.
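One caveat with shared static flags: assuming, as with the native app glue, that android_main() runs on its own thread while onFling() fires on the UI thread, plain bool variables would be a data race. Here is a minimal sketch of the same idea using std::atomic, where exchange() reads and resets each flag in a single step so a swipe arriving mid-poll isn't lost. The names are hypothetical.

```cpp
#include <atomic>

namespace {
// Hypothetical file-scope flags: set by the JNI entry points, consumed by
// the game loop.
std::atomic<bool> gLeftSwipe{false};
std::atomic<bool> gRightSwipe{false};
}  // namespace

// What the JNI functions would do.
void notifyLeftSwipe() { gLeftSwipe.store(true); }
void notifyRightSwipe() { gRightSwipe.store(true); }

struct SwipeState {
  bool left;
  bool right;
};

// What pollEvents() would do: read and reset each flag atomically.
SwipeState pollSwipes() {
  return {gLeftSwipe.exchange(false), gRightSwipe.exchange(false)};
}
```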

After some sleep-deprived thrashing with how static variables work inside of .cpp files, I finally got it working. The game can be played on Android!

The actual behavior sucks. If the GestureDetector consumes any given event, then that event doesn't get forwarded to the underlying GameActivity. This leads to some really weird behavior where the app doesn't respect touches that might be part of an ongoing gesture. I changed the onTouchEvent() method so that it always forwards the event to the GameActivity, regardless of the gesture detection.

carbonara/app/src/main/java/com/justindriggers/carbonara/MainActivity.kt snippet

```kotlin
override fun onTouchEvent(event: MotionEvent): Boolean {
    gestureDetector.onTouchEvent(event)
    return super.onTouchEvent(event)
}
```

That feels better, but it's still not right. The game is playable, but the inputs feel very very slow. I'm happy enough with that for now. I'm going to sleep.

Better Swipe Events

After some much needed rest, I decided to do a side-by-side comparison of the game on my Pixel 3 XL and my iPhone 13 Pro. The iOS version is obviously much more responsive, and Android has a reputation for having more input lag than iOS, but the difference is way more than I would expect.

After some careful examination, the thing that stands out to me the most is that the iOS UISwipeGestureRecognizer appears to fire as soon as a swipe gesture is detected, even if the gesture hasn't completed yet. Android's onFling() method, on the other hand, only fires once the user has lifted their finger off of the screen to complete the gesture. I wonder how much this single difference in behavior affects the perceived responsiveness of Android apps, compared to their iOS counterparts.

I obviously don't have to use the onFling() callback to trigger my swipes. The MotionEvents are all provided directly to me, so I could parse them manually. I could even do that from the native side using the GameActivityMotionEvents.
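A swipe that fires mid-gesture boils down to tracking where the touch started and reporting a swipe on the first move event that travels far enough horizontally. This hypothetical EarlySwipeRecognizer is just a sketch of that idea, fed with plain coordinates rather than GameActivityMotionEvents, and the threshold is an assumption.

```cpp
#include <cmath>
#include <optional>

enum class SwipeDirection { Left, Right };

// Hypothetical recognizer: fires on the first MOVE that travels far enough
// horizontally, instead of waiting for the finger to lift like onFling().
class EarlySwipeRecognizer {
 public:
  explicit EarlySwipeRecognizer(float thresholdPx) : _threshold(thresholdPx) {}

  void onDown(float x, float y) {
    _startX = x;
    _startY = y;
    _tracking = true;
  }

  std::optional<SwipeDirection> onMove(float x, float y) {
    if (!_tracking) {
      return std::nullopt;
    }
    const float dx = x - _startX;
    const float dy = y - _startY;
    if (std::fabs(dx) >= _threshold && std::fabs(dx) > std::fabs(dy)) {
      _tracking = false;  // report at most one swipe per touch
      return dx > 0 ? SwipeDirection::Right : SwipeDirection::Left;
    }
    return std::nullopt;
  }

  void onUp() { _tracking = false; }

 private:
  float _threshold;
  float _startX = 0.0f;
  float _startY = 0.0f;
  bool _tracking = false;
};
```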

I did some Googling and found a few different solutions. There is an ndk_helper library floating around that parses native motion events into gestures, though it doesn't use the GameActivityMotionEvents, nor does it detect swipe events. There's also an AndroidUIGestureRecognizer library, written by sephiroth74, that aims to replicate the iOS swipe behavior on Android, though it doesn't do it natively, so I'd have to continue using JNI functions whenever the events are detected. There are obviously plenty of other options, but those are the two that stood out the most to me.

I went back and forth over whether or not I care enough to write this behavior "from scratch". I take a lot of pride in having built this game from the ground up, but realistically, that's not entirely true. I've taken a lot of shortcuts, and it would be completely unreasonable to truly build things entirely from scratch. Here is a list of things that I did not build:

- GLM (the math library used by the entire engine)

- STB (used to load image files in Pesto)

- ios-cmake

- OpenGL, Metal, and other possible graphics libraries

- OpenAL, AudioEngine, and other possible audio libraries

- Clang

- The drivers that our platforms require for my code to interact with the hardware

- The actual hardware of those platforms

You get my point. Even console manufacturers with first party titles cannot claim that they built every aspect of their systems from scratch. At some point, they are reliant on some pre-existing chip or manufacturing process that was developed by another company.

So I have successfully talked myself out of writing this dumb gesture recognition code from scratch. I added the AndroidUIGestureRecognizer dependency to my Gradle configuration, and I was able to integrate the UIGestureRecognizerDelegate into our MainActivity class very easily.

I just have to take a moment to give some praise to this library. It might not be 100% true to Apple's implementation, but it's definitely very close, and the game feels great on my Pixel 3 XL.

Cleanup

The static variables in the AndroidInputManager are gross, so I'd like to get rid of them. Using static variables in this class effectively means there can only ever be one instance of it. Theoretically, that should always be true, but there are better design decisions that can be made.

I added a new C++ SwipeListener interface to a new jni subdirectory, and updated the AndroidInputManager to implement that interface. I ripped out all of the static variables, and just used the same "pending"/"active" type of setup that we used in the IosInputManager.

Then I created a new Callbacks file in the jni directory. This file does not declare a class, but contains two global functions: registerSwipeListener() and unregisterSwipeListener(). The implementations of these functions add/remove a pointer to a SwipeListener to/from a global std::set. I moved the JNI functions from the main.cpp file into the Callbacks.cpp file, and changed their implementations to iterate over the pointers in the set and invoke the appropriate function of each SwipeListener. Finally, the AndroidInputManager's constructor and destructor call the register/unregister functions, passing this.

The end result is the same functionality, but a much cleaner and scalable way for the platform abstractions to interact with the JNI functions.
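Put together, the SwipeListener interface, the registry functions, and a listener with a pending/active split look roughly like this sketch. The method names on SwipeListener, the dispatch*() functions (standing in for the bodies of the JNI functions), and InputManagerSketch are hypothetical. Like the description above, it has no locking. If the JNI callbacks and the game loop run on different threads, the set and the pending flags would need synchronization.

```cpp
#include <set>

// Hypothetical interface; the real SwipeListener's method names may differ.
class SwipeListener {
 public:
  virtual ~SwipeListener() = default;
  virtual void onLeftSwipe() = 0;
  virtual void onRightSwipe() = 0;
};

namespace {
std::set<SwipeListener*> gSwipeListeners;
}  // namespace

void registerSwipeListener(SwipeListener& listener) { gSwipeListeners.insert(&listener); }
void unregisterSwipeListener(SwipeListener& listener) { gSwipeListeners.erase(&listener); }

// What the JNI entry points would delegate to.
void dispatchLeftSwipe() {
  for (auto* listener : gSwipeListeners) listener->onLeftSwipe();
}
void dispatchRightSwipe() {
  for (auto* listener : gSwipeListeners) listener->onRightSwipe();
}

// Registers itself for its own lifetime; swipes land in "pending" and are
// promoted to "active" once per pollEvents().
class InputManagerSketch : public SwipeListener {
 public:
  InputManagerSketch() { registerSwipeListener(*this); }
  ~InputManagerSketch() override { unregisterSwipeListener(*this); }

  void onLeftSwipe() override { _pendingLeft = true; }
  void onRightSwipe() override { _pendingRight = true; }

  void pollEvents() {
    _activeLeft = _pendingLeft;
    _activeRight = _pendingRight;
    _pendingLeft = false;
    _pendingRight = false;
  }

  bool isLeftSwipe() const { return _activeLeft; }
  bool isRightSwipe() const { return _activeRight; }

 private:
  bool _pendingLeft = false;
  bool _pendingRight = false;
  bool _activeLeft = false;
  bool _activeRight = false;
};
```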

When I tried to run the game in the emulator, I was greeted by an error stating that critical native methods must be static. Kotlin doesn't have a "static" keyword, but you can define a companion object within your class, which is effectively static. I did so, and moved the methods into the companion object, but the names of my C++ JNI functions had to change:

```cpp
JNIEXPORT void JNICALL Java_com_justindriggers_carbonara_Native_00024Companion_onLeftSwipe()
JNIEXPORT void JNICALL Java_com_justindriggers_carbonara_Native_00024Companion_onRightSwipe()
```

If it weren't for Android Studio generating those for me, it would have taken me ages to figure out the _00024Companion part.

The app managed to start up, but actually attempting a swipe in the emulator caused another crash with the same error. After some more Googling, I found the @JvmStatic annotation, which makes the Kotlin compiler generate a real static method on the enclosing Native class. That meant changing the names of my JNI functions back to the way they were before!

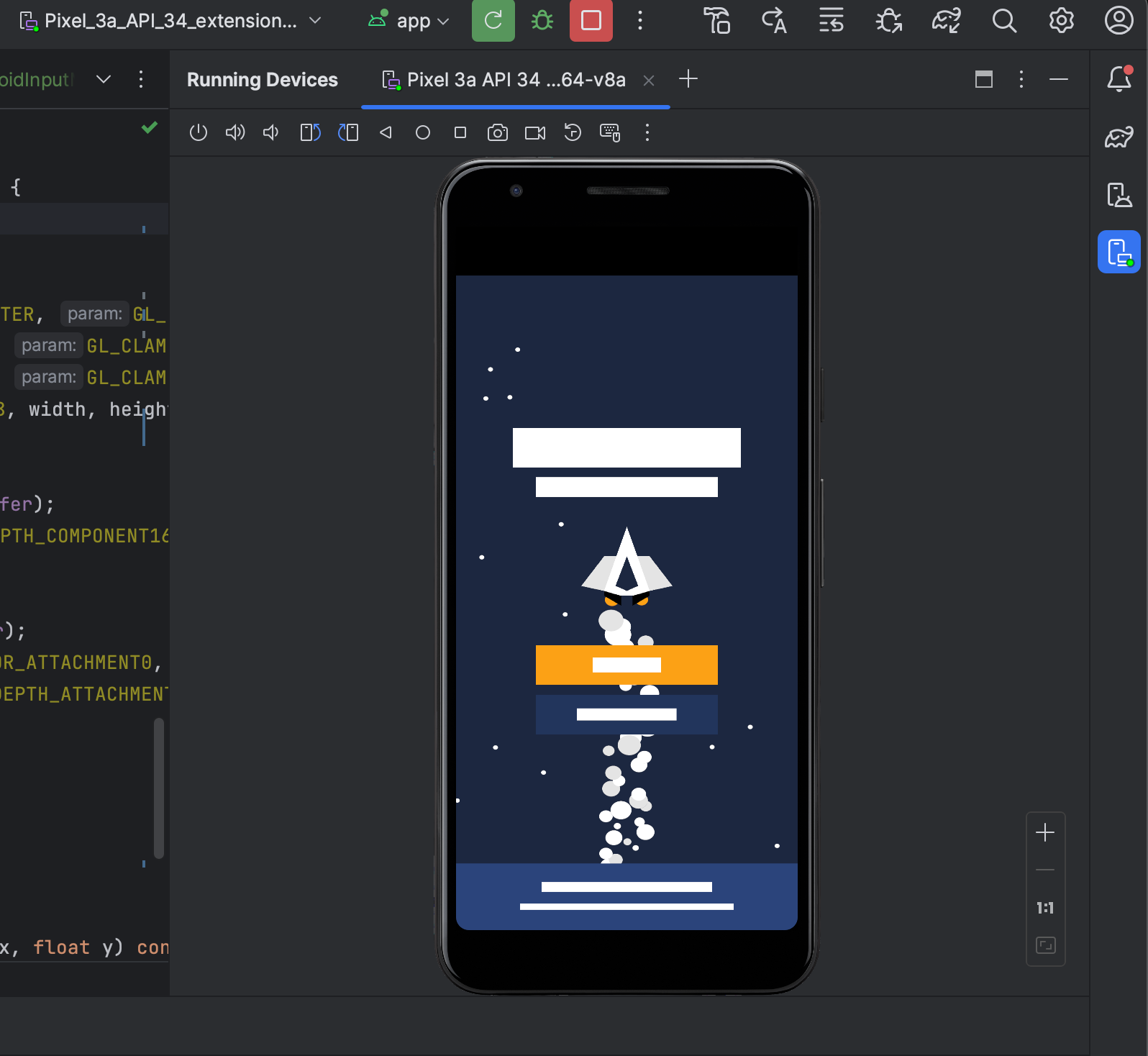

This time, the game finally works in the emulator!

The changes are surprisingly minimal, considering how much time I spent on making them.

23.3 Graphical Glitches

With user inputs being recognized and the game in a playable state, I noticed some graphical anomalies within the InfiniteRunnerScene. Specifically, the semi-transparent backgrounds of the UI elements don't seem to be rendering correctly.

Ignoring the text rendering (which is broken because we haven't implemented the AndroidOpenGLFileLoader yet), the background color of the "level" indicator is more of a semi-transparent white than black. The backgrounds of the score and shield indicators have some weird artifacts, as if they are on top of other parts of the screen. These issues don't occur in the emulator, just on my physical device.

I tried installing the Android GPU Inspector, but unfortunately my Pixel 3 XL is not supported, so I'm flying blind here. I tried narrowing down which FeatureRenderers were responsible, but ran into even weirder errors, since their ordering is pretty sensitive.

I couldn't find anything out of place, so I tried experimenting with different options for the glBlend() command. To my surprise, this code actually worked:

renderers/opengl/src/OpenGLRenderer.cpp snippet

I verified the change through the Android emulator, and Pesto as well, just to make sure I hadn't unintentionally broken something. I'll be honest, I'm not exactly an expert on rendering, I'm just glad it's working now.

I fixed up some of the compiler warnings in the renderers/opengl folder, but none of the other changes actually affected the functionality of the code. I also realized I was requesting a depth buffer from EGL (because I absent-mindedly copied and pasted what was in the template project), even though our main framebuffer doesn't require it, so I removed it.

23.4 Image File Loading

Android packages are typically built with an assets/ subdirectory within the app/src/main/ directory, containing all the files that the app will require at runtime. We actually already have an assets/ directory at the root of our project, but not all of the files in that directory need to be bundled into the Android app (namely the .ai file containing the app icon vector paths).

We have a couple of different options. We could configure our CMakeLists.txt to copy the desired files from the root assets folder into the Android-specific assets folder, and perhaps add a .gitignore entry for the Android assets folder. This option couples the asset dependency with the library dependency, and while it's unlikely to cause any actual issues, I really don't like that.

Alternatively, we can configure the build.gradle.kts such that the Android build uses specific parts of the root assets directory instead. I think we'll do that:

carbonara/app/build.gradle.kts snippet

```kotlin
android {
    ...
    sourceSets {
        named("main") {
            assets.srcDirs(
                "../../assets/audio/",
                "../../assets/fonts/"
            )
        }
    }
}
```

This configuration will build the Android app as if the files within the assets/audio/ and assets/fonts/ directories were directly in the Android assets directory instead!

The Asset Manager

Loading files from an Android app isn't as simple as using the standard library to load files from the filesystem - instead, we must go through the AssetManager. When I first pondered how an Android build would work, I was worried that I'd have to load the files into memory from Java/Kotlin and transfer the bytes over JNI to the native library. Luckily, the template project contains an example using the native AAssetManager_open() function instead!

I was able to copy much of the template implementation for file handling into the AndroidOpenGLFileLoader. Not only does it open the file contained within the assets directory, but it also decodes the image into a buffer using a variety of AImageDecoder_* functions.

Just like the implementations in Alfredo, Scampi, and Pesto, I've hard-coded Carbonara's AndroidOpenGLFileLoader to only load the font.png file. If the day comes where I need to be able to load different image files, then I will refactor all of them.

carbonara/app/src/main/cpp/platform/AndroidOpenGLFileLoader.cpp

```cpp
#include "AndroidOpenGLFileLoader.h"

#include <cstddef>
#include <string>
#include <utility>
#include <vector>

#include <android/asset_manager.h>
#include <android/imagedecoder.h>

namespace linguine::carbonara {

render::OpenGLFileLoader::ImageFile AndroidOpenGLFileLoader::getImage() const {
  const auto filename = std::string("font.png");

  // Open the file from the app's assets (font.png comes from assets/fonts/).
  auto asset = AAssetManager_open(&_assetManager, filename.c_str(), AASSET_MODE_BUFFER);

  if (!asset) {
    return {};
  }

  AImageDecoder* decoder = nullptr;

  if (AImageDecoder_createFromAAsset(asset, &decoder) != ANDROID_IMAGE_DECODER_SUCCESS) {
    AAsset_close(asset);
    return {};
  }

  // Decode into tightly packed 8-bit RGBA pixels.
  AImageDecoder_setAndroidBitmapFormat(decoder, ANDROID_BITMAP_FORMAT_RGBA_8888);

  const AImageDecoderHeaderInfo* header = AImageDecoder_getHeaderInfo(decoder);

  auto width = AImageDecoderHeaderInfo_getWidth(header);
  auto height = AImageDecoderHeaderInfo_getHeight(header);
  auto stride = AImageDecoder_getMinimumStride(decoder);

  auto data = std::vector<std::byte>(height * stride);

  const auto result = AImageDecoder_decodeImage(decoder, data.data(), stride, data.size());

  // Clean up the decoder, then the asset it was created from.
  AImageDecoder_delete(decoder);
  AAsset_close(asset);

  if (result != ANDROID_IMAGE_DECODER_SUCCESS) {
    return {};
  }

  return ImageFile {
      .width = width,
      .height = height,
      .data = std::move(data)
  };
}

} // namespace linguine::carbonara
```

The AndroidOpenGLFileLoader constructor requires a reference to the AAssetManager, which was easily retrieved using *app.activity->assetManager.

Now the Android version of the game contains actual text instead of white boxes! Here's the commit if you're interested, but there's really not much more to it than what I've already mentioned here.

23.5 Audio

Unfortunately, it looks like OpenAL is not natively supported on Android. I could compile one of the software implementations of OpenAL floating around (without hardware acceleration), but the LGPL license makes that prohibitive, and it would add quite a bit of complexity to my already large codebase.

It looks like Android supports OpenSL ES all the way back to API level 9 (we're currently targeting a minimum of API level 30). Modern versions of Android (starting with API level 26) support AAudio - as far as I can tell, this is an Android-specific library (not that I've ever encountered anyone else using OpenSL ES either).

It looks like Google's preference is for developers to use their Oboe library, which dynamically utilizes AAudio on devices that support it, and OpenSL ES on devices that don't. That's a neat idea, and would have definitely been useful when AAudio was first introduced, but at this point, I might as well use AAudio directly. AAudio is already included in the Android NDK, while I would have to include Oboe in its own third_party/ directory.

AAudio

I started playing around with AAudio's C API, and at first I was really confused. There is no "engine", "driver" or "context" that you must initialize like the other audio APIs I've used so far. You simply "build" stream objects (similar to "sources" in OpenAL, or "player nodes" in Audio Engine), and they "just work" as you write data into their buffers. I'm using quotes a lot for some reason; I'll try to chill out.

The big difference is that it's our responsibility to copy data into the stream buffers, rather than handing the API a buffer that we own and allowing the framework to copy the contents of that buffer into the audio stream over time.

The result is that any audio latency is our own fault, and we should be copying data into the streams in smaller chunks. The API provides you with the size of a single "burst" - the amount of data that you can copy into the stream without experiencing any blocking. When I first started playing with the API, I was trying to copy my entire sound effect buffers into the stream at once. Not only did it affect the game's frame rate, but it affected the timing of the sound effects.
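To make the chunking idea concrete, here is a minimal, platform-independent sketch of handing a sound effect to a stream one burst at a time. It assumes mono float samples, and `framesPerBurst` stands in for the value AAudioStream_getFramesPerBurst() reports; the `SoundCursor` struct and `nextChunk()` function are hypothetical names, not part of AAudio.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical playback cursor over a mono float sound buffer.
struct SoundCursor {
    const std::vector<float>* samples = nullptr;
    std::size_t nextFrame = 0;
};

// Copies at most one burst of frames into `out`, padding with silence once
// the sound runs out. Returns the number of real (non-silent) frames written.
std::size_t nextChunk(SoundCursor& cursor, float* out, std::size_t framesPerBurst) {
    std::size_t remaining = 0;
    if (cursor.samples != nullptr && cursor.nextFrame < cursor.samples->size()) {
        remaining = cursor.samples->size() - cursor.nextFrame;
    }

    const std::size_t count = std::min(remaining, framesPerBurst);

    if (count > 0) {
        const float* begin = cursor.samples->data() + cursor.nextFrame;
        std::copy(begin, begin + count, out);
        cursor.nextFrame += count;
    }

    // Fill the rest of the burst with silence so the stream never plays garbage
    std::fill(out + count, out + framesPerBurst, 0.0f);

    return count;
}
```

Calling `nextChunk()` once per burst keeps every copy small and bounded, which is the property that avoids the frame-rate and timing problems described above.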

It took me a while to understand that the API intends for you to write your buffer data over time in a separate thread. Even after reading the documentation, it didn't really dawn on me that the API provides a mechanism for doing this easily. As I fiddled with things more and more, I began to appreciate the level of control that the API gives to the developer. While it's not quite as intuitive as the other audio APIs, it allows me to more finely control the number of frames between the looping music tracks.

The high-priority callbacks are called by a separate thread for each active stream, and provide the developer with a raw pointer to the underlying stream buffer. The callbacks are effectively - and constantly - asking the question: "what's next?"

The code I ended up with never even uses the AAudioStream_write() function. Instead, the class keeps track of the intended state of each stream, the play() methods reset the state for whichever stream I want, and the callbacks write the next frame of audio data based on the current state. The rest of the code isn't dissimilar to the other AudioManager implementations - create some streams, load the buffers, keep track of the least-recently-used effect stream.
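A rough sketch of that "what's next?" callback, with the AAudio types stripped out so it can run anywhere. On a device, the body would sit inside a function matching AAudio's data callback type, `aaudio_data_callback_result_t (AAudioStream* stream, void* userData, void* audioData, int32_t numFrames)`, registered with AAudioStreamBuilder_setDataCallback() and returning AAUDIO_CALLBACK_RESULT_CONTINUE. The nextFrame and delayFrames fields borrow the names used in this section, but the `StreamState` struct, the `play()` helper, and the mono float format are assumptions, and this version is deliberately single-threaded.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical per-stream state; the callback reads and advances it.
struct StreamState {
    const std::vector<float>* buffer = nullptr;  // sound currently assigned
    std::size_t nextFrame = 0;                   // next sample to emit
    std::int32_t delayFrames = 0;                // silence to emit first
};

// Resets the state so the callback starts the sound from the beginning.
void play(StreamState& state, const std::vector<float>& buffer, std::int32_t delayFrames) {
    state.buffer = &buffer;
    state.nextFrame = 0;
    state.delayFrames = delayFrames;
}

// Body of the data callback: fill `numFrames` mono frames based on the state.
void onAudioReady(StreamState& state, float* audioData, std::int32_t numFrames) {
    for (std::int32_t i = 0; i < numFrames; ++i) {
        if (state.delayFrames > 0) {
            // Still waiting for the requested delay to elapse
            --state.delayFrames;
            audioData[i] = 0.0f;
        } else if (state.buffer != nullptr && state.nextFrame < state.buffer->size()) {
            audioData[i] = (*state.buffer)[state.nextFrame++];
        } else {
            // Nothing left to play
            audioData[i] = 0.0f;
        }
    }
}
```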

Unfortunately, because the callbacks are executed on a separate thread from the game's main thread, the state variables are subject to race conditions: the callback mutates the nextFrame and delayFrames fields while the main thread tries to "reset" those same fields upon requesting that a new effect or song be played.

I added in some mutexes to prevent this conflict from happening, but locking the mutex on every execution of the callback seemed to destroy the performance of the game. I got rid of the mutexes and decided to add two sets of variables in each state object - a "requested" state, owned by the main thread, and the "actual" state, owned by the callback thread. The callback thread reads from the requested fields to make sure they match the actual state. This isn't truly thread-safe, but the callback thread should eventually recognize the updated variables and adjust its own state, even if it takes a couple of frames.
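Here is a sketch of the requested/actual split under one deliberate change: the requested fields are std::atomic rather than plain variables. Unsynchronized reads and writes of plain fields from two threads are a data race (undefined behavior in C++) even when they appear to work, while atomics keep the lock-free, "eventually consistent" behavior described above and make it well-defined. All names here are hypothetical. Note that detecting a new request by comparing buffer pointers alone means that replaying the same buffer goes unnoticed.

```cpp
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical state split between the game thread and the audio callback thread.
struct StreamState {
    // "Requested" fields: written by the main thread, read by the callback.
    std::atomic<const std::vector<float>*> requestedBuffer{nullptr};
    std::atomic<std::int32_t> requestedDelayFrames{0};

    // "Actual" fields: owned exclusively by the callback thread.
    const std::vector<float>* buffer = nullptr;
    std::size_t nextFrame = 0;
    std::int32_t delayFrames = 0;
};

// Main thread: store the delay first, then publish the buffer with release semantics.
void requestPlay(StreamState& state, const std::vector<float>* buffer, std::int32_t delayFrames) {
    state.requestedDelayFrames.store(delayFrames, std::memory_order_relaxed);
    state.requestedBuffer.store(buffer, std::memory_order_release);
}

// Callback thread: adopt any new request, then emit the next frames.
void onAudioReady(StreamState& state, float* audioData, std::int32_t numFrames) {
    const auto* requested = state.requestedBuffer.load(std::memory_order_acquire);

    if (requested != state.buffer) {
        state.buffer = requested;
        state.nextFrame = 0;
        state.delayFrames = state.requestedDelayFrames.load(std::memory_order_relaxed);
    }

    for (std::int32_t i = 0; i < numFrames; ++i) {
        if (state.delayFrames > 0) {
            --state.delayFrames;
            audioData[i] = 0.0f;
        } else if (state.buffer != nullptr && state.nextFrame < state.buffer->size()) {
            audioData[i] = (*state.buffer)[state.nextFrame++];
        } else {
            audioData[i] = 0.0f;
        }
    }
}
```

Because the buffer is published with release semantics and read with acquire semantics, a callback that observes the new buffer also observes the delay that was stored before it.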

I'm pretty happy with the results. Honestly, the looping music logic is much more consistent with AAudio than it is on either the OpenAL or Audio Engine implementations - I might revisit those at some point to apply some of the lessons I've learned here.

The code I wrote ended up getting split into two commits - one for the initial working implementation and file loading, and another to work around the issues caused by using multiple threads. Note that I had to name the CMake library linguine-aaudio to prevent the naming collision with the actual aaudio library - both of which need to be linked by Carbonara.

While I was starting to write the next section, I found another bug that prevented sound effects from playing if the previous sound effect for that stream happened to use the same buffer. I added some new "generation" fields to the state objects to prevent that from happening, similar to the solutions we've used in the other audio implementations.
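A sketch of the generation idea, again with hypothetical names and atomic fields: every play request bumps a counter, and the callback restarts playback whenever the counter differs from the last one it acted on, even if the buffer pointer is identical.

```cpp
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stream state with a generation counter per play request.
struct StreamState {
    std::atomic<const std::vector<float>*> requestedBuffer{nullptr};
    std::atomic<std::uint64_t> requestGeneration{0};

    // Owned by the callback thread
    const std::vector<float>* buffer = nullptr;
    std::size_t nextFrame = 0;
    std::uint64_t actualGeneration = 0;
};

// Main thread: store the buffer, then bump the generation (release) to publish it.
void requestPlay(StreamState& state, const std::vector<float>* buffer) {
    state.requestedBuffer.store(buffer, std::memory_order_relaxed);
    state.requestGeneration.fetch_add(1, std::memory_order_release);
}

// Callback thread: a changed generation means "start over", even for the same buffer.
void onAudioReady(StreamState& state, float* audioData, std::int32_t numFrames) {
    const auto generation = state.requestGeneration.load(std::memory_order_acquire);

    if (generation != state.actualGeneration) {
        state.actualGeneration = generation;
        state.buffer = state.requestedBuffer.load(std::memory_order_relaxed);
        state.nextFrame = 0;
    }

    for (std::int32_t i = 0; i < numFrames; ++i) {
        const bool hasMore = state.buffer != nullptr && state.nextFrame < state.buffer->size();
        audioData[i] = hasMore ? (*state.buffer)[state.nextFrame++] : 0.0f;
    }
}
```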

23.6 Lifecycle Management

Back when I added the lifecycle hooks for iOS, I ended up doing a lot of engine-level work to support pausing and resuming various subsystems at the same time, and had to do a bit of tweaking in the TimeManager to make sure that the game's time wasn't progressing according to real time while the game was in the background. Those changes all made sense to me, and ultimately it boiled down to how the engine worked rather than how the platform worked - the platform simply requested whether the engine should pause or resume.

Generally, Android's activity lifecycle is pretty straightforward. There are a handful of events that are guaranteed to happen in specific orders, and different apps can listen for different events in order to make sure resources are allocated and cleaned up neatly. The NDK's native activity, however, adds a few events that I've never encountered before: APP_CMD_INIT_WINDOW, APP_CMD_TERM_WINDOW, APP_CMD_GAINED_FOCUS, and APP_CMD_LOST_FOCUS.

I assumed that I could just pause() the Engine in the onPause() callback, and resume() it in the onResume() callback, similar to how the iOS app behaves - seems logical, right? Boy, was I wrong...

Evidently, Android completely destroys the "window" each time the app enters the background, triggering the APP_CMD_TERM_WINDOW event. A new window is created when the app is brought back into the foreground, triggering the APP_CMD_INIT_WINDOW event, which is our signal to create a new "surface" via the eglCreateWindowSurface() function. We can then bind the new surface to the existing OpenGL context using the eglMakeCurrent() function.

Unfortunately, that's not the whole story. Apparently, the context might be destroyed, in which case we will either need to restart the game entirely, or implement a mechanism to get a new context and reinitialize all OpenGL resources. The decision between the two depends on how frequently we can expect the context to be lost. According to the EGL specification:

Power management events can occur asynchronously while an application is running. When the system returns from the power management event the EGLContext will be invalidated, and all subsequent client API calls will have no effect (as if no context is bound).

Following a power management event, calls to eglSwapBuffers, eglCopyBuffers, or eglMakeCurrent will indicate failure by returning EGL_FALSE. The error EGL_CONTEXT_LOST will be returned if a power management event has occurred.

On detection of this error, the application must destroy all contexts (by calling eglDestroyContext for each context). To continue rendering the application must recreate any contexts it requires, and subsequently restore any client API state and objects it wishes to use.

In testing on my Pixel 3 XL, these "power management events" seem to occur at least every time I turn the display off and back on. Google's own ndk-samples appear to completely re-initialize all OpenGL resources every time the window changes. I'm not sure how much I should trust these samples, though: I can see a code path where the window hasn't changed, yet the sample completely reinitializes the OpenGL context in response to some unhandled error, only to terminate the app immediately afterward.

Concept

I've noticed that other game engines tend to split their renderers into separate frontend and backend implementations. The frontend of a renderer is the part that the engine and game code interact with directly - in our case, the Cameras and Renderables that get allocated, as well as the RenderFeatures that each Renderable contains. The backend is responsible for translating the interactions with the frontend into API-specific implementations.

In our engine, our frontend is the Renderer base class, and the backends are each subtype of that base class (OpenGL and Metal). In a traditionally-structured engine containing separate frontend and backend implementations, re-initializing all of the graphics API resources is just a matter of destroying the old backend and creating a new one in its place. Unfortunately, in our engine, those two concepts are coupled into a single object, and destroying the Renderer entirely means destroying all of the Cameras and Renderables that the game code relies on, even though those objects have nothing to do with the graphics API backend.

As a proof-of-concept, I added some virtual init() and destroy() methods to the Renderers and FeatureRenderers, and moved all of the initialization and destruction logic into those methods, with the constructors and destructors simply invoking the new methods. I added new hooks for the lifecycle events into Carbonara's ApplicationAdapter, and invoked the destroy() and init() methods on the current Renderer whenever an EGL_CONTEXT_LOST error was detected. If the context is not lost, we simply create a new surface and bind it to the existing context via eglMakeCurrent() as usual.
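The decision made on APP_CMD_INIT_WINDOW can be sketched independently of EGL. In the sketch below, the `WindowHooks` struct, `WindowResult` enum, and `onInitWindow()` function are hypothetical; on a device, the hooks would wrap the EGL calls noted in the comments along with the renderer's destroy() and init() methods, which lets the control flow be exercised without a display.

```cpp
#include <functional>

// Hypothetical outcome of handling APP_CMD_INIT_WINDOW
enum class WindowResult { Rebound, Recreated, Failed };

// Hypothetical hooks standing in for the real EGL and renderer calls
struct WindowHooks {
    std::function<bool()> makeCurrent;      // e.g. eglMakeCurrent() with the new window surface
    std::function<bool()> contextWasLost;   // e.g. eglGetError() == EGL_CONTEXT_LOST
    std::function<bool()> recreateContext;  // e.g. eglDestroyContext() + eglCreateContext()
    std::function<void()> destroyRenderer;  // release every OpenGL resource
    std::function<void()> initRenderer;     // rebuild every OpenGL resource
};

WindowResult onInitWindow(const WindowHooks& hooks) {
    // Common case: the context survived, so the new surface just gets bound to it
    if (hooks.makeCurrent()) {
        return WindowResult::Rebound;
    }

    // Any failure other than a lost context is unexpected
    if (!hooks.contextWasLost()) {
        return WindowResult::Failed;
    }

    // Per the EGL spec: destroy the lost context, create a new one,
    // and restore all client API objects on it
    hooks.destroyRenderer();

    if (!hooks.recreateContext() || !hooks.makeCurrent()) {
        return WindowResult::Failed;
    }

    hooks.initRenderer();
    return WindowResult::Recreated;
}
```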

This totally works, albeit with some expected hiccups during state changes, but I absolutely hate it. This is a lesson in composition over inheritance - it would be much cleaner if the renderer contained a std::unique_ptr to a backend, which could be swapped out at any time. We have some refactoring to do.

The Backend

I created a new RenderBackend interface in Linguine, and converted the Renderer implementations into RenderBackends instead. The Renderer frontend still exists, forwarding many of its calls to whichever RenderBackend is currently set, though the frontend still handles the creation of Cameras and Renderables entirely.

I brought the old TestScene up to modern standards by moving all of its composition logic into an init() method - the unused class had been rotting this whole time, but it was the only old scene that I decided not to delete entirely. It does a great job at validating a lot of the core features of our renderer, including transformation matrices, uniforms, and entity selection.

The actual changes aren't all that interesting, even though there were a lot of touch points. Other than the RenderBackends having to call resize() on their own FeatureRenderers (an arbitrary choice I made that I'm only now questioning), the actual implementation of the backends was basically untouched!

The biggest thing to note is that the RenderBackends no longer have direct access to the frontend's Viewport, Cameras, or Renderables, since they no longer inherit the protected methods used to retrieve them. The frontend passes references to any relevant objects into the backend whenever necessary.

- The Renderables are provided to the FeatureRenderers as they always were, and each FeatureRenderer can decide whether it's relevant or not.
- The Cameras are passed into the backend's draw() method, so that the backend can iterate over them appropriately.
- The Viewport was never really a backend thing - it was more intended for the game code to have access to the current screen dimensions in an immutable way. Instead, each backend (and feature renderer) must cache the current dimensions whenever resize() is called, if they care to remember.

The most interesting change to come out of this massive commit is how each platform must instantiate the Renderer, in order to provide it to the Engine:

carbonara/app/src/main/cpp/ApplicationAdapter.cpp snippet

auto renderBackend = render::OpenGLRenderBackend::create(std::make_unique<AndroidOpenGLFileLoader>(*app.activity->assetManager));
auto renderer = std::make_shared<Renderer>(std::move(renderBackend));

Okay, maybe it's not all that interesting - but now I can do this:

carbonara/app/src/main/cpp/ApplicationAdapter.cpp snippet

auto& renderer = _engine->get<Renderer>();

...

auto renderBackend = render::OpenGLRenderBackend::create(std::make_unique<AndroidOpenGLFileLoader>(*app.activity->assetManager));
renderer.setBackend(std::move(renderBackend));

Setting a new backend will exchange the underlying std::unique_ptr, destroying the previous backend along with all of the memory it allocated. The new backend will create all new resources based on the new OpenGL context, and all of the FeatureRenderers of the new backend will receive onFeatureChanged() updates for all existing Renderables as if they had just been created.
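A stripped-down sketch of that composition. The Renderer frontend owns the game-facing objects and holds the backend through a std::unique_ptr, so replacing the backend never touches them. This is a simplified, hypothetical interface rather than Linguine's actual one: Renderables are reduced to integer IDs, a single onFeatureChanged() hook stands in for the per-feature FeatureRenderers, and Cameras are left out.

```cpp
#include <memory>
#include <utility>
#include <vector>

// Hypothetical backend interface: everything graphics-API specific lives here.
class RenderBackend {
public:
    virtual ~RenderBackend() = default;
    virtual void onFeatureChanged(int renderableId) = 0;
    virtual void draw() = 0;
};

// Hypothetical frontend: owns API-agnostic state, forwards work to the backend.
class Renderer {
public:
    explicit Renderer(std::unique_ptr<RenderBackend> backend)
        : _backend(std::move(backend)) {}

    int create() {
        const int id = _nextId++;
        _renderables.push_back(id);
        _backend->onFeatureChanged(id);
        return id;
    }

    // Reassigning the pointer destroys the old backend (and its GPU resources),
    // then every existing renderable is replayed into the new backend.
    void setBackend(std::unique_ptr<RenderBackend> backend) {
        _backend = std::move(backend);
        for (const int id : _renderables) {
            _backend->onFeatureChanged(id);
        }
    }

    void draw() { _backend->draw(); }

private:
    std::unique_ptr<RenderBackend> _backend;
    std::vector<int> _renderables;
    int _nextId = 0;
};
```

Since the caller constructs the new backend before setBackend() runs, its resources are created on the new context first, and the old backend is destroyed when the std::unique_ptr is reassigned.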

Obviously this change required making changes to all of the platforms. Pesto was the least affected, simply swapping the old style for the new one. Carbonara's ApplicationAdapter now receives events from the event handler in main.cpp. Scampi received a bit of code cleanup, but nothing that really changed what it was doing before.

Alfredo, on the other hand, changed a lot more. The old MetalRenderer had a weird autoDraw code path: the renderer decided whether it should actually start rendering, or forward the call to the MTK::View, which would result in the AlfredoViewDelegate receiving a drawInMTKView: call, which would then forward it back to the renderer. I removed that unnecessary round trip. Since the new MetalRenderBackend no longer has direct access to the Cameras, it became impossible for the MTK::View to trigger the draw logic directly. Instead, the AlfredoApplicationDelegate continuously calls draw() on the MTK::View, which triggers drawInMTKView: in the AlfredoViewDelegate. The AlfredoViewDelegate simply tick()s the entire Engine, which left the Engine's old run() method completely unused, so I deleted it.

I decided that I would prefer if the main() method were responsible for the loop, so I made some more changes to make that happen, and fixed some more of Alfredo's weird window management behavior. The macOS app has always been nothing more than a development platform for me, rather than something I intend to release, so I've been pretty bad about keeping up with it. I'm glad to have cleaned it up a bit, but I've probably sunk too much time into it.

23.7 Persistence

Hopefully this will be a bit easier than the previous sections. Unfortunately, there are no official examples of persisting app settings from native code, and the template project makes no attempt to do so either, so I'm on my own with this one.

I dug around the NDK's headers in the android/ directory, finding no evidence of functions that persist "settings" or "preferences" anywhere. My Google searches indicate that the only way to achieve this is with some more JNI code. The SaveManager is invoked sparingly, and only during non-critical moments, so the added latency of communicating over JNI isn't such a big deal in this case.

I created a new SharedPreferences class in the jni/ directory. Unlike the JNI code we wrote for the SwipeListener, which allows Java to call into the C++ code, this new class will call Java methods from C++. My goal is for the SharedPreferences C++ class to mirror the interface of its Java counterpart, though I think I'll limit the scope of the mirror to contain only the methods that I care about using.

Like I mentioned earlier, this ain't my first rodeo with JNI, so whipping up these changes wasn't particularly difficult, though I did encounter some bugs along the way.

The SharedPreferences constructor uses the android_app to retrieve a JNIEnv for the current thread. The android_app already contains a jobject representing the current instance of the Java GameActivity, and we can use that jobject to retrieve the jmethodIDs for various methods, including the activity's getSharedPreferences() method. We can actually invoke that method with the JNIEnv's CallObjectMethod() method, which returns a jobject representing the actual SharedPreferences Java instance. We can take that jobject and repeat the cycle for whichever Java methods we want to call.

In the constructor, we go ahead and get the SharedPreferences instance so that we can cache the jmethodIDs of the methods we care about: edit(), getBoolean(), and getInt(). Our C++ class contains the same method signatures, which can use the various "call" methods (CallObjectMethod(), CallBooleanMethod(), and CallIntMethod()) to invoke those functions and return their values to the C++ code.

In the case of the edit() method, I've created a nested class named Editor that is based upon a Java interface of the same name. The Editor's constructor follows the same process, using the JNIEnv to cache jmethodIDs for any relevant methods - namely, putBoolean(), putInt(), and apply().

Outside of a few typos and copy/paste errors that were easily discoverable, I made a couple of mistakes during my first iteration. First, I was incorrectly passing strings as method parameters. I had assumed I could just construct a jstring using the std::string's data(). The resulting error message informed me that I was using a variable after it had already been freed, which is why you might notice a random memory address in a comment in the above commit (and a followup commit removing the comment). Apparently, I have to use the JNIEnv's NewStringUTF() method instead, which was easily found with some light Googling. Additionally, the Java version of the Editor interface's put*() methods return a reference to the same Editor instance, so that the programmer can chain calls like a builder pattern. I wanted to replicate that functionality in C++, so I decided to compare the resulting jobjects (from the Call*Method() methods) against the existing cached jobject, and throw an exception if they didn't match. Again, the JNIEnv has a special method for doing just that: IsSameObject().

The biggest annoyance with using JNI is just understanding how the Java method signatures must be formatted. I mostly used the documentation to figure this out, but also leaned on my lengthy Java experience to know that things like nested classes must be delimited by a $ symbol (such as Landroid/content/SharedPreferences$Editor;).
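For reference, here is a small hypothetical helper that assembles descriptors following the standard JNI rules: primitives are single letters (Z for boolean, I for int, J for long, V for void), a class is L, its slash-separated name, and a semicolon, and a nested type is joined to its outer class with $. In practice these descriptors are usually written as string literals; the helper just makes the rules explicit.

```cpp
#include <initializer_list>
#include <string>

// Hypothetical helper: converts a fully-qualified Java class name such as
// "android.content.SharedPreferences$Editor" into its JNI type descriptor.
std::string classDescriptor(const std::string& javaName) {
    std::string result = "L";
    for (const char c : javaName) {
        result += (c == '.') ? '/' : c;
    }
    return result + ";";
}

// Hypothetical helper: joins parameter descriptors and a return descriptor
// into a method descriptor, e.g. "(Ljava/lang/String;Z)Z".
std::string methodDescriptor(std::initializer_list<std::string> params,
                             const std::string& returnType) {
    std::string result = "(";
    for (const auto& param : params) {
        result += param;
    }
    return result + ")" + returnType;
}
```

For example, getSharedPreferences(String, int) is "(Ljava/lang/String;I)Landroid/content/SharedPreferences;", getInt(String, int) is "(Ljava/lang/String;I)I", and apply() is "()V".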

23.8 App Icons

Much like Xcode, Android Studio provides its own app icon generator, which appears when you try to create a new "Image Asset" in the res/ directory. It provides some options that allow you to define separate foreground and background layers, but there's really not much more to say about it.

Android apps also contain a splash screen that appears while the app is starting up. By default, the splash screen displays the app's icon in the center, with a gray background filling the rest of the screen. I created a new "theme" in order to set the splash screen's background to a shade of blue equivalent to the game's Secondary Palette color.

Well that was easy.

23.9 Publishing Early Access

The only thing left to add to Carbonara is leaderboard support, but similar to Apple's Game Center, I can't actually integrate Google Play Games into the app until I get the app listing created in the Google Play Console.

I created a Google Play developer account for my company while I was starting to ponder the possibility of porting the game to Android, in order to avoid any holdups in the process when I finally got the game into a playable state. Now that everything seems to be working, it's time to upload the first test release to the portal.

Google apparently requires a lot more setup in order to publish an app for "closed testing" (the equivalent of Apple's "external testing"), including screenshots, a privacy policy, and a bunch of filled-out questionnaires regarding target audiences and monetization. At this point, I just have to assume I'm releasing the app for free with no ads, so I answered all the questions accordingly.

I made some minor changes for the release build. I declared a minimum version of OpenGL ES so that unsupported devices aren't able to download the app from the Google Play Store at all. I also allowed debug symbols to be included in the final app bundle so that crash reports might be a little more helpful. As I understand it, the actual debug symbols never make it to the end-user's device; rather, the crash is uploaded to Google, and Google symbolizes the crash dump before providing the final stacktrace to me.

At this point I'm just waiting for Google's approval, which could take up to a week.

23.10 The Leaderboard

While I wait, I think I have enough information to get started implementing a leaderboard using Google Play Games Services.

There appear to be two versions of the Google Play Games Services SDK floating around: the original SDK requires an explicit login flow, while the v2 SDK automatically logs the player in when the game launches. The behavior of the v2 SDK most closely matches that of the iOS app experience, so it would be nice if I could use that.

Unfortunately, at the time of this writing, the native version of the v2 SDK only supports signing in - not the ability to interact with specific features such as leaderboards. While that's a bit of a bummer, the code example seems like it's just using JNI to interact with the Java SDK anyway, so I guess we can just do the same.

I began following the instructions for the Java SDK, and quickly moved into the leaderboard instructions. I spent way too much time trying to understand why Google's own instructions weren't working for me. After giving up for the day and revisiting it later, I figured out that there were multiple problems.

First, the Google Play Games app must be installed on the device. I'm sure the app is pre-installed on many devices these days, but I haven't used Android in a while. As far as I know, Game Center is built into iOS and can't be "uninstalled", so this isn't a problem on Apple devices.

The second problem was more difficult to determine, but Google's troubleshooting page helped me figure it out. When I was setting up the app in the Google Play Console, I decided to allow Google to manage the signing key of the app using Play App Signing. As I followed the instructions, I was required to generate an OAuth 2.0 client ID, which requires the SHA1 fingerprint of the app's signing key.

This is where I screwed up. My OAuth client was configured using the fingerprint of the Play App Signing key, which isn't actually used to sign the app until the game is uploaded to the Google Play Console. Locally, the app is signed using entirely different keys. The debug key, used by default, is generated automatically by Android Studio. I've been using my own "release" key that I generated for the purposes of uploading the app to the Google Play Console, which Google refers to as the "upload" key, and even shows you the fingerprint of this separate key within the console.

In order to permit both the Play App Signing key and the upload key to interact with Google Play Games Services, I had to configure another OAuth 2.0 client, and add both clients as separate "credentials" within the "Play Games Services configuration" page of the Google Play Console.

Now that I've wasted several days on getting the sample code to work, I can start on the actual hard part!

Callbacks with JNI

We've already written code for both Java-to-C++ and C++-to-Java interactions over JNI, but this is going to be a little different (and something I've never done before).

Our LeaderboardManager interface was designed around the use of callbacks, because it seemed obvious that these calls would require asynchronous communication over the internet. The Google Play Services SDK is also oriented around callbacks for the same reason.

Obviously we can write a C++ method that calls the Java method to kick off the request, but how do we return the result back to C++ in such a way that the response is tied to the original request, in order to trigger the callbacks provided by the game code?

The SwipeListener functions were designed to be global triggers that anyone could listen to. We could theoretically create global triggers for all the Google Play Services methods that we intend to use, perhaps assigning some sort of ID to them so that the original caller could associate each response back to its original request.
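For comparison, the ID-based approach might look something like this sketch (all names hypothetical): C++ keeps a table of pending requests keyed by an integer, Java would echo that integer back, and the completion handler looks up and removes the matching callbacks. It works, but it needs a shared table, plus a mutex if completions can arrive on a different thread than the one issuing requests.

```cpp
#include <cstdint>
#include <functional>
#include <mutex>
#include <unordered_map>
#include <utility>

// Hypothetical registry associating request IDs with their callbacks.
class PendingRequests {
public:
    std::int64_t add(std::function<void()> onSuccess, std::function<void()> onError) {
        std::lock_guard<std::mutex> lock(_mutex);
        const auto id = _nextId++;
        _pending.emplace(id, Entry{std::move(onSuccess), std::move(onError)});
        return id;
    }

    // Removes the entry, then fires the matching callback outside the lock.
    // Returns false if the ID is unknown (e.g. already completed).
    bool complete(std::int64_t id, bool success) {
        Entry entry;
        {
            std::lock_guard<std::mutex> lock(_mutex);
            auto it = _pending.find(id);
            if (it == _pending.end()) {
                return false;
            }
            entry = std::move(it->second);
            _pending.erase(it);
        }
        (success ? entry.onSuccess : entry.onError)();
        return true;
    }

private:
    struct Entry {
        std::function<void()> onSuccess;
        std::function<void()> onError;
    };

    std::mutex _mutex;
    std::unordered_map<std::int64_t, Entry> _pending;
    std::int64_t _nextId = 1;
};
```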

Another thought comes to mind: if we could pass an arbitrary ID to Java that just gets returned back to C++ for the sake of association, then why not just pass a pointer back and forth? We could allocate an object on the heap in C++, pass the pointer to Java as a long, and have Java return the pointer back to C++. The C++ JNI function could act on the object using other information provided by Java (presumably firing the C++ callbacks), and then delete the object from the heap when it's done!

We've actually seen this pattern in several places throughout our journey: for example, Emscripten callbacks all provide an arbitrary pointer to "user data" - a pointer which we ourselves provide when registering the callback. Within the callback, we can assume the pointer is the same as the one we originally provided and that we can safely cast it to that type. We've also seen this pattern show up in the AAudio callbacks, as well as the native Android game-activity event loop. In all of those examples, the pattern was established by the library that we were utilizing, but this time we will be the ones implementing it!

After a lot of typing, here is what I managed to whip up. I created a new Leaderboards C++ class in the jni/ directory, and a Kotlin counterpart interface in the leaderboards package. The two classes have very similar interfaces, since they are meant to interact closely with one another. Submitting a new score is the simplest case, so I'll try to break that down a bit, without boring you with too many JNI details.

carbonara/app/src/main/cpp/jni/Leaderboards.cpp snippet

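struct SubmitScoreCallbacks {
    std::function<void()> onSuccess;
    std::function<void()> onError;
};

void Leaderboards::submitScore(std::string leaderboardId, int64_t score,
                               std::function<void()> onSuccess,
                               std::function<void()> onError) const {
    // Heap-allocate the callbacks; the pointer travels to Java as an opaque jlong
    auto callbacks = new SubmitScoreCallbacks { std::move(onSuccess), std::move(onError) };

    auto jLeaderboardId = _env->NewStringUTF(leaderboardId.c_str());

    _env->CallVoidMethod(_leaderboards, _submitScoreMethod,
                         jLeaderboardId, static_cast<jlong>(score),
                         reinterpret_cast<jlong>(callbacks));

    // The game thread doesn't return to Java between calls, so free the local reference
    _env->DeleteLocalRef(jLeaderboardId);
}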

The first step simply invokes the Java method from C++, providing all the parameters you might expect, along with a pointer to a new SubmitScoreCallbacks struct instance, which contains the callbacks that will eventually get invoked. The pointer is cast to a jlong so that Java can just treat it as a long. Note that the struct is allocated on the heap using new, but nothing in this function deletes it, nor are there any smart pointers managing that memory - outside of the ArchetypeEntityManager, I've avoided leaving unmanaged memory lying around like that.

carbonara/app/src/main/java/com/justindriggers/carbonara/leaderboards/LeaderboardsAdapter.kt snippet

override fun submitScore(leaderboardId: String, score: Long, userData: Long) {
    leaderboardsClient.submitScoreImmediate(leaderboardId, score)
        .addOnCompleteListener(SubmitScoreCompletionListener(userData))
}

carbonara/app/src/main/java/com/justindriggers/carbonara/leaderboards/SubmitScoreCompletionListener.kt

package com.justindriggers.carbonara.leaderboards

import com.google.android.gms.games.leaderboard.ScoreSubmissionData
import com.google.android.gms.tasks.OnCompleteListener
import com.google.android.gms.tasks.Task

internal class SubmitScoreCompletionListener(
    private val userData: Long
) : OnCompleteListener<ScoreSubmissionData> {

    override fun onComplete(task: Task<ScoreSubmissionData>) {
        if (task.isSuccessful) {
            onSuccess(userData)
        } else {
            onFailure(userData)
        }
    }

    private external fun onSuccess(userData: Long)

    private external fun onFailure(userData: Long)
}

The LeaderboardsAdapter Kotlin class (which implements the Leaderboards interface) contains a submitScore() method, which gets invoked by the C++ JNI code above. This method calls the submitScoreImmediate() method from the Google Play Games Services SDK, and registers a callback that contains the user data pointer. This callback contains the native methods onSuccess() and onFailure() - one of which will get invoked by the onComplete() method once the asynchronous task is completed.

carbonara/app/src/main/cpp/jni/Leaderboards.cpp snippet

// C linkage keeps the symbol names unmangled so the JVM can find them
extern "C" {

JNIEXPORT void JNICALL Java_com_justindriggers_carbonara_leaderboards_SubmitScoreCompletionListener_onSuccess(JNIEnv* env, jobject thiz, jlong userData) {
    // Recover the struct allocated in submitScore(), fire the callback, then free it
    auto callbacks = reinterpret_cast<SubmitScoreCallbacks*>(userData);
    callbacks->onSuccess();
    delete callbacks;
}

JNIEXPORT void JNICALL Java_com_justindriggers_carbonara_leaderboards_SubmitScoreCompletionListener_onFailure(JNIEnv* env, jobject thiz, jlong userData) {
    auto callbacks = reinterpret_cast<SubmitScoreCallbacks*>(userData);
    callbacks->onError();
    delete callbacks;
}

} // extern "C"

These C++ methods are the JNI receivers for the native methods defined above - one for the onSuccess() method, and the other for onFailure(), both receiving the user data pointer. Each method casts the user data pointer back to the SubmitScoreCallbacks type, invokes the appropriate callback, and most importantly, deletes the struct object from the heap to prevent memory leaks.
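A variation on the same round trip that lets std::unique_ptr express the ownership hand-off: release() the pointer when it crosses into Java, and re-adopt it as soon as it comes back, so the struct is freed on every path out of the receiver without a manual delete. Java is simulated here by simply handing the token back, jlong is modeled as a 64-bit std::int64_t, and `toToken()`, `fromToken()`, and the free `onSuccess()` function are hypothetical helpers, not JNI functions.

```cpp
#include <cstdint>
#include <functional>
#include <memory>

struct SubmitScoreCallbacks {
    std::function<void()> onSuccess;
    std::function<void()> onError;
};

// Hypothetical: hand ownership to the other side as an opaque 64-bit token.
std::int64_t toToken(std::unique_ptr<SubmitScoreCallbacks> callbacks) {
    return reinterpret_cast<std::int64_t>(callbacks.release());
}

// Hypothetical: take ownership back; the struct is deleted when the result goes out of scope.
std::unique_ptr<SubmitScoreCallbacks> fromToken(std::int64_t token) {
    return std::unique_ptr<SubmitScoreCallbacks>(reinterpret_cast<SubmitScoreCallbacks*>(token));
}

// Same shape as a JNI receiver such as ...SubmitScoreCompletionListener_onSuccess
void onSuccess(std::int64_t userData) {
    auto callbacks = fromToken(userData);
    callbacks->onSuccess();
}  // callbacks is freed here
```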

The submitScore() method is the simplest because the callbacks contain no parameters. The loadTopScores() method is much more complex because we must transfer an array of score results over JNI and parse the results into something that makes sense in C++. I'm not going to go into detail about it here, but you are free to dig into the implementation if you're curious.

carbonara/app/src/main/cpp/platform/AndroidLeaderboardManager.cpp snippet

void AndroidLeaderboardManager::submitScore(int32_t score, std::function<void()> onSuccess,
                                            std::function<void(std::string reason)> onError) {
    _leaderboards.submitScore(LeaderboardId, score, std::move(onSuccess),
                              [onError = std::move(onError)]() {
                                  onError("Error");
                              });
}

Finally, the AndroidLeaderboardManager simply constructs a Leaderboards object and routes all of its calls through it, as if it had access to a C++ version of the Google Play Games Services SDK! The onError callback it receives takes a reason string, while the Leaderboards class expects a callback with no parameters, so we simply wrap it in a lambda that supplies a generic "Error" reason. I could add more detailed error codes, but I'm going to leave it like this for now.

As I was debugging through the code during testing, I noticed a couple of errors I had made in my earlier JNI code. First, we're not supposed to store jobjects directly, since the references JNI hands us are "local" references that are only valid for the duration of the native call that produced them. Instead, we should call NewGlobalRef() on the JNIEnv, which creates a reference that stays valid across calls and threads and keeps the object from being garbage collected. When we're done with it, we call DeleteGlobalRef() to release it. I updated a few spots in the existing JNI code with that change, and made sure to apply the pattern to the new JNI code as well.
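A common way to make the NewGlobalRef()/DeleteGlobalRef() pairing hard to forget is a small RAII wrapper. The sketch below is hypothetical (ScopedGlobalRef is not part of the JNI API or the engine). It is templated on the environment type so it compiles here without jni.h; with Env = JNIEnv and Ref = jobject (or jclass), it calls the real JNIEnv::NewGlobalRef() and JNIEnv::DeleteGlobalRef() member functions. One caveat: a JNIEnv* is only valid on its own thread, so this version must be destroyed on the thread that created it; code that releases references elsewhere would need to obtain that thread's env from the JavaVM instead.

```cpp
#include <utility>

// Hypothetical RAII owner of a JNI global reference. Env is JNIEnv in real code;
// Ref is jobject, jclass, etc.
template <typename Env, typename Ref>
class ScopedGlobalRef {
public:
    // Promotes a local reference to a global one that outlives the current native call.
    ScopedGlobalRef(Env* env, Ref localRef)
        : env_(env), ref_(localRef ? static_cast<Ref>(env->NewGlobalRef(localRef)) : nullptr) {}

    // Releases the global reference so the object can be garbage collected again.
    ~ScopedGlobalRef() {
        if (ref_ != nullptr) {
            env_->DeleteGlobalRef(ref_);
        }
    }

    // Non-copyable: each global reference must be deleted exactly once.
    ScopedGlobalRef(const ScopedGlobalRef&) = delete;
    ScopedGlobalRef& operator=(const ScopedGlobalRef&) = delete;

    // Movable: ownership transfers, and the moved-from wrapper deletes nothing.
    ScopedGlobalRef(ScopedGlobalRef&& other) noexcept
        : env_(other.env_), ref_(std::exchange(other.ref_, nullptr)) {}

    Ref get() const { return ref_; }

private:
    Env* env_;
    Ref ref_;
};
```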

The debugger pointed out another error directly: our Editor's apply() call was incorrectly using CallObjectMethod() instead of CallVoidMethod(), even though the Java apply() method returns void. I made that change, and the debugger is much happier now.

23.11 Cleanup

Before I wrap this chapter up, I want to tackle a couple of bugs I've been noticing.

Inconsistent Looping Music

The biggest issue I've noticed is that the looping music seems inconsistent. Sometimes the "B" track never plays at all, so the game is pretty silent until the scheduled "A" track comes back around. It's really off-putting, and definitely not intentional.

I was able to read through the code and quickly identify at least one issue: we never actually reset the "A" track's requestedDelayFrames to 0, so a new play() request could inherit a stale delay from a previous play() request.

Unfortunately, that wasn't actually causing the issues I was experiencing. After a lot of logging and debugging, I discovered that the "B" track's requestGeneration wasn't being incremented until after its AAudioStream_requestStart() call. Because the audio callback thread runs at high priority, the track was actually starting and stopping before requestGeneration was incremented!
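Both fixes come down to how shared state is written between the game thread and the AAudio callback thread. The sketch below is a hypothetical model, not the engine's actual track code: the field names requestedDelayFrames and requestGeneration come from the text above, while TrackRequest, play(), and observeRequest() are invented for illustration, and requestStart stands in for AAudioStream_requestStart(). play() always overwrites the delay and publishes the new generation (release ordering) before starting the stream, so even a callback that runs before requestStart() returns sees the new request (acquire ordering) along with its delay.

```cpp
#include <atomic>
#include <cstdint>
#include <functional>

// Hypothetical request state shared by the game thread and the audio callback.
struct TrackRequest {
    std::atomic<std::int32_t> requestedDelayFrames{0};
    std::atomic<std::uint32_t> requestGeneration{0};
};

// Game-thread side. requestStart stands in for AAudioStream_requestStart().
void play(TrackRequest& track, const std::function<void()>& requestStart,
          std::int32_t delayFrames = 0) {
    // Always overwrite the delay so a previous play() can't leak into this one.
    track.requestedDelayFrames.store(delayFrames, std::memory_order_relaxed);
    // Publish the new generation BEFORE starting: the high-priority callback
    // thread may run before requestStart() even returns.
    track.requestGeneration.fetch_add(1, std::memory_order_release);
    requestStart();
}

// Audio-thread side: returns the delay of a newly visible request, or -1 if the
// callback still sees the generation it already handled (delays are assumed >= 0).
std::int32_t observeRequest(const TrackRequest& track, std::uint32_t lastSeenGeneration) {
    if (track.requestGeneration.load(std::memory_order_acquire) == lastSeenGeneration) {
        return -1;
    }
    return track.requestedDelayFrames.load(std::memory_order_relaxed);
}
```

If the increment came after requestStart(), as in the bug described above, a callback that fires during requestStart() would still see the old generation and get -1.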

Login Improvements

In the LeaderboardsAdapter, there was actually a chance for the isAuthenticated() callback to report success even though the player was not authenticated, because the task completing successfully doesn't mean the sign-in itself succeeded. I made a change to check the state of the AuthenticationResult embedded in the callback parameter, and added a conditional to skip the player ID request if the login failed.

23.12 Looking Forward

As I was fixing the bugs above, Google finally approved the "closed testing" version of the game. I submitted it on Monday, and it is now Friday, so I guess it took them about 4 business days to knock it out. Unfortunately, I now have a new build submitted containing the leaderboard and bug fixes, so I'm stuck waiting once more. I went ahead and promoted the latest version to the "open testing" track, which will allow anyone to install the "early access" version of the app directly from the Play Store.

As a former Android fanboy, I hate to admit it, but developing native applications for Android is a huge pain, especially compared to the relative ease of native iOS development (Objective-C sucks, but at least I don't have to use a JNI equivalent).

There are still some improvements I'd like to make to the game and engine, but the Android-specific code is in a complete-enough state to conclude this chapter.